Unlock Your Hidden Content: Is Your Website Invisible in the AI Search Revolution?

If you have ever used an accordion, a tabbed menu, or any expandable feature on your website, you have likely wrestled with a critical question: can search engines actually see the content tucked inside? For years, the answer was relatively simple. Today, with the meteoric rise of AI-powered search from platforms like ChatGPT, Perplexity, and Google, the game has fundamentally changed. The technical choices you make about hiding content are no longer minor details—they could be the very thing rendering your most valuable information invisible, costing you authority and future customers.

This isn’t just a technical problem for developers; it’s a strategic business challenge. But it’s one you can master. Understanding how your website presents information to these new AI crawlers is the first step in transforming your site from a potential liability into a predictable growth engine.

The Two Architectures of Hidden Content

When content is collapsed or hidden behind a click, it is generally implemented using one of two foundational methods. The distinction between them is the single most important factor in determining whether AI search engines can find and understand your information.

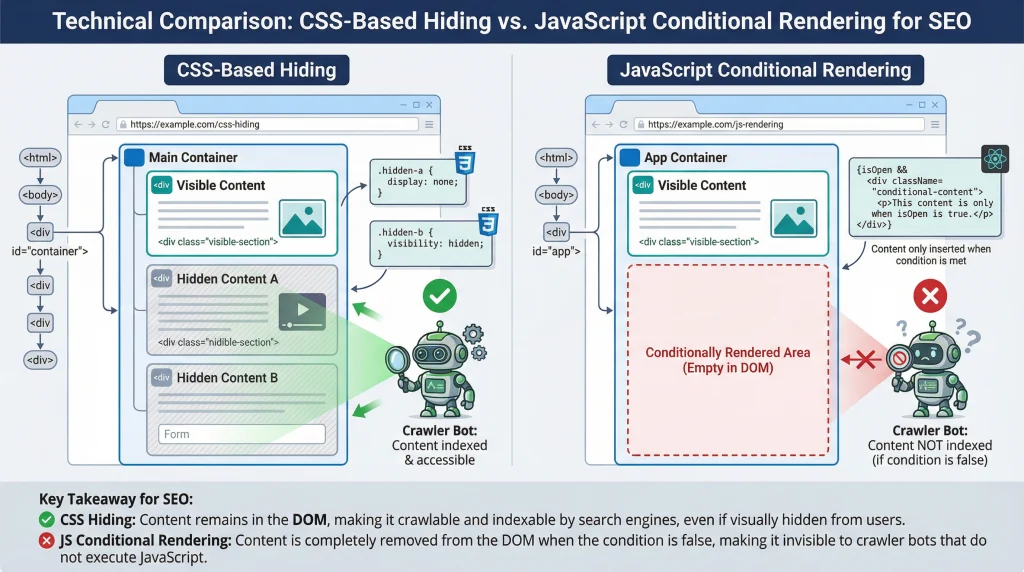

1. CSS-Based Hiding: The Content is Always Present

This is the traditional and most reliable method. The content is delivered in the page’s initial HTML, so it is part of the Document Object Model (DOM) as soon as the page loads. CSS rules keep it invisible to the user until they click to reveal it. Think of it like having a complete document in a folder: the information is all there, just momentarily out of sight.

Common CSS techniques include:

- display: none;
- visibility: hidden;
- height: 0; overflow: hidden;

The crucial takeaway is that the content exists in the HTML source code from the moment the page loads. Any crawler, from a simple scraper to the sophisticated Googlebot, can read it because it’s part of the page’s permanent structure [1].
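To make this concrete, here is a minimal sketch in plain JavaScript (runnable in Node, no framework) that builds CSS-hidden accordion markup as a string. The function names, the panel and is-closed class names, and the FAQ text are hypothetical placeholders. Every panel is written into the HTML. A closed panel only gets a class that a display: none; rule hides.

```javascript
// Hypothetical helper: escapes text before it is placed inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical helper: builds accordion markup where every panel is always emitted.
// Closed panels get the is-closed class, which CSS hides without removing them.
function renderCssAccordion(items, openIndex = -1) {
  const style = "<style>.panel.is-closed { display: none; }</style>";
  const sections = items.map((item, i) => {
    const isOpen = i === openIndex;
    const panelClass = isOpen ? "panel" : "panel is-closed";
    return (
      `<section>` +
      `<button aria-expanded="${isOpen}">${escapeHtml(item.title)}</button>` +
      `<div class="${panelClass}">${escapeHtml(item.body)}</div>` +
      `</section>`
    );
  });
  return style + sections.join("");
}

// Placeholder FAQ content; no panel is open (openIndex defaults to -1).
const html = renderCssAccordion([
  { title: "Do you ship abroad?", body: "Yes, to selected countries." },
  { title: "What is your return policy?", body: "Returns are free within 30 days." },
]);

// Even with every panel closed, both answers are in the HTML a crawler downloads.
console.log(html.includes("Returns are free within 30 days.")); // true
```

A visitor’s click then only needs to remove the is-closed class. The content itself never has to be created.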

2. JavaScript Conditional Rendering: The Content is Created on Demand

Modern JavaScript frameworks like React, Vue, and Angular often use a more dynamic approach. Instead of loading the content and hiding it with CSS, they generate the HTML itself only when a user interacts with the page.

Consider a typical React component for an accordion:

```jsx
// When isOpen is false, the && expression short-circuits to false and React
// renders nothing in its place: the panel is never added to the DOM.
function Accordion({ isOpen, content }) {
  return (
    <div>
      {isOpen && <div className="panel">{content}</div>}
    </div>
  );
}
```

When isOpen is false, the panel with your content doesn’t just become invisible; it doesn’t exist at all. It is not in the DOM and not in the page’s HTML source. The JavaScript creates it instantly, but only after the user clicks. This is like having a blank piece of paper and only writing the information down after someone asks to see it.
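The same effect can be reproduced outside React. The sketch below is a hypothetical plain-JavaScript analog of the component’s isOpen && ... expression. renderConditionalPanel is an illustrative name, not a React API. It uses a ternary because a bare false inside a template literal would print the word "false". When isOpen is false, the content never appears in the generated markup, whether that markup is produced in the browser or on a server.

```javascript
// Hypothetical analog of the React Accordion: the panel markup is only
// generated when isOpen is true, mirroring `isOpen && <div ...>`.
function renderConditionalPanel(isOpen, content) {
  // Ternary instead of &&, because `${false}` would insert the text "false".
  const panel = isOpen ? `<div class="panel">${content}</div>` : "";
  return `<div>${panel}</div>`;
}

const closedMarkup = renderConditionalPanel(false, "Returns are free within 30 days.");
const openMarkup = renderConditionalPanel(true, "Returns are free within 30 days.");

console.log(closedMarkup); // <div></div>
console.log(openMarkup.includes("Returns are free")); // true
```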

Why This Is a Game-Changer for AI Search

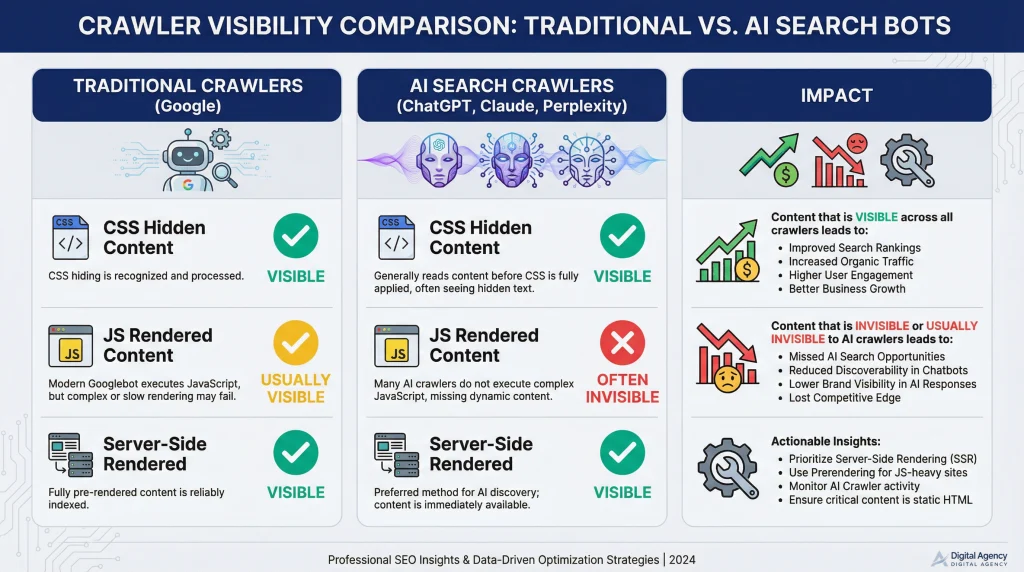

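For years, the web has optimized for Google’s crawler, Googlebot, which has become increasingly proficient at rendering JavaScript and indexing dynamically generated content [2]. Even Googlebot does not click buttons or otherwise interact with a page, though, so content that only appears after a click can be missed there as well. The new wave of AI crawlers faces a more basic limitation: many of them do not run JavaScript at all.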

The crawlers run by major AI companies such as OpenAI (ChatGPT), Anthropic (Claude), and Perplexity are built for speed and efficiency. They typically fetch the raw, initial HTML of a page and move on. They do not usually launch a full browser environment, execute complex JavaScript, and wait for your website to build itself.

This means that if your essential product details, critical FAQs, or in-depth service descriptions are hidden behind JavaScript conditional rendering, these AI crawlers will not see them. To them, that part of your page is simply blank.

An analysis of crawler traffic published by Vercel in December 2024 supports this, finding that the crawlers run by OpenAI, Anthropic, Meta, ByteDance, and Perplexity do not render JavaScript [3]. Some of them do download script files, but they do not execute them to see the final, user-facing content.
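You can roughly approximate what a non-rendering crawler sees by searching the raw HTML for a key phrase after discarding scripts. The sketch below is a heuristic, not a description of how any specific AI crawler is implemented. visibleWithoutJs is a hypothetical name, and the regex-based tag stripping is a simplification that a real HTML parser would handle better. In practice you would feed it the response body from fetch(url).then((r) => r.text()) or the output of curl.

```javascript
// Heuristic: does `phrase` appear in the page text without running any JavaScript?
// Regex-based stripping is a simplification, not a full HTML parser.
function visibleWithoutJs(rawHtml, phrase) {
  const text = rawHtml
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ") // scripts may be downloaded but are not run
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ") // stylesheets carry no page text
    .replace(/<[^>]+>/g, " ") // drop remaining tags, keep the text between them
    .replace(/\s+/g, " "); // normalize whitespace
  return text.includes(phrase);
}

// A client-rendered shell: the FAQ answer exists only inside the JavaScript.
const csrShell =
  '<div id="root"></div><script>render("Returns are free within 30 days.")</script>';

// The same answer already present in the HTML, hidden with CSS.
const cssHiddenPage =
  '<div class="panel" style="display:none">Returns are free within 30 days.</div>';

console.log(visibleWithoutJs(csrShell, "Returns are free within 30 days.")); // false
console.log(visibleWithoutJs(cssHiddenPage, "Returns are free within 30 days.")); // true
```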

At a Glance: How Crawlers See Your Content

| How the Content Is Delivered | Traditional Crawlers (Google) | AI Search & Scrapers (ChatGPT, etc.) | Why It Matters for Your Business |
|---|---|---|---|
| CSS Hidden | ✅ Visible | ✅ Visible | Your content is discoverable by all search engines, maximizing your reach. |
| JS Conditional Rendering | ⚠️ Only if rendered without a click | ❌ Often Invisible | You risk being invisible to a rapidly growing segment of search traffic. |
| Server-Side Rendered | ✅ Visible | ✅ Visible | Your content arrives fully formed in the first response, so every crawler can read it. |

Four Proven Strategies to Ensure Your Content Is Seen

If discoverability is your goal (and it should be), you must ensure your content is present in the initial HTML payload. This doesn’t mean sacrificing a dynamic user experience. It means choosing a technical strategy that delivers a complete, fully formed page to every crawler, every time. Here are your best options, explained in plain English.

1. The Simple Fix: Prioritize CSS-Based Hiding

For most common uses like accordions and tabs, the most straightforward and powerful solution is to stick with CSS-based hiding. This proven method ensures your content is always in the DOM, making it readable by any crawler, regardless of its JavaScript capabilities. You can still create beautiful, smooth animations; you just build them on a foundation of universally accessible HTML.

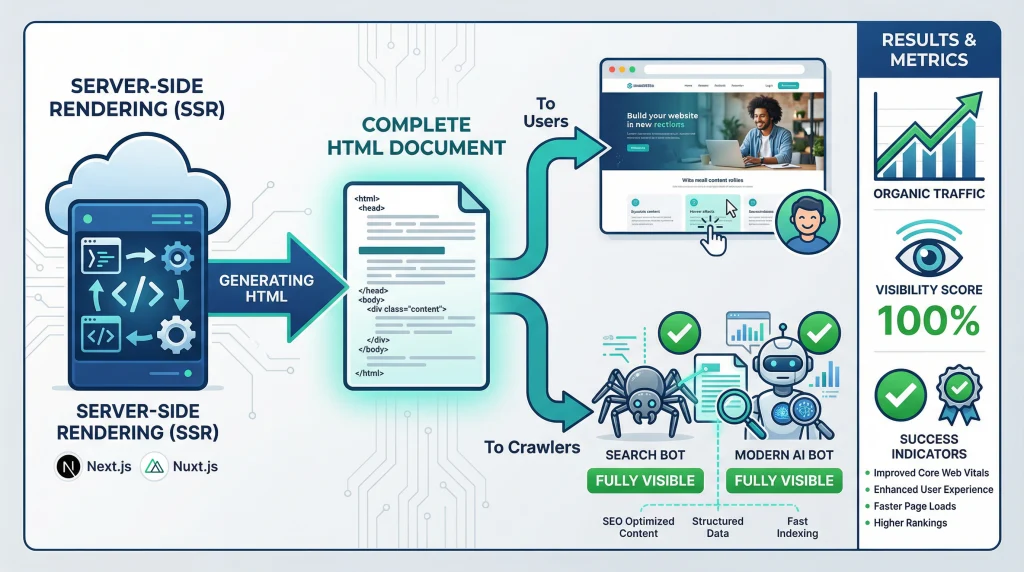

2. The Modern Standard: Embrace Server-Side Rendering (SSR)

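Frameworks like Next.js for React and Nuxt for Vue offer a transformative solution out of the box: server-side rendering. With SSR, your server generates the complete HTML for a page, including the content inside your accordions and tabs, before sending it to the browser or crawler [4]. One caveat: this only works if those components actually output the collapsed content on the first render. An accordion built with conditional rendering will omit closed panels from the server-generated HTML too, so pair SSR with CSS-based hiding. Done that way, SSR delivers a fully formed, instantly readable document and gives you the best of both worlds: a rich, interactive experience for users and reliable indexability for every search engine.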
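Next.js and Nuxt handle this for you, but the underlying idea fits in a few lines. The following is a minimal, framework-free sketch of server-side rendering using the request-handler shape of Node’s built-in http module. renderFaqPage, faqHandler, and the FAQ data are hypothetical. The handler builds the complete HTML, including collapsed answers, before responding. A crawler that never runs JavaScript therefore still receives every answer.

```javascript
// Hypothetical FAQ content; a real app might load this from a database or CMS.
// Real code should HTML-escape these values; omitted here because they are fixed placeholders.
const FAQS = [
  { question: "Do you offer refunds?", answer: "Yes, within 30 days of purchase." },
  { question: "Do you ship internationally?", answer: "Yes, to selected countries." },
];

// Builds the complete document on the server, including collapsed answers.
// The is-closed class hides them with CSS; it does not remove them from the HTML.
function renderFaqPage(faqs) {
  const items = faqs
    .map(
      ({ question, answer }) =>
        `<section><button aria-expanded="false">${question}</button>` +
        `<div class="answer is-closed">${answer}</div></section>`
    )
    .join("\n");
  return `<!doctype html>
<html>
<head>
<title>FAQ</title>
<style>.answer.is-closed { display: none; }</style>
</head>
<body>
<h1>Frequently Asked Questions</h1>
${items}
</body>
</html>`;
}

// Request handler with the same (req, res) shape as http.createServer callbacks:
// every response already contains every answer, with no JavaScript required.
function faqHandler(req, res) {
  res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
  res.end(renderFaqPage(FAQS));
}

// To serve it from a CommonJS script:
// require("node:http").createServer(faqHandler).listen(3000);
```

Frameworks add routing, data loading, and hydration on top of this, but the crawler-facing result is the same: the answers are in the first response.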

3. The Performance Play: Use Static Site Generation (SSG)

If your content doesn’t change in real time (e.g., blog posts, documentation, marketing pages), static site generation is a strong strategy. Tools like Next.js, Nuxt, and Astro can pre-render your pages into static HTML files at build time. The result is a very fast website whose content every crawler can read, because no JavaScript has to run to produce it. This holds as long as your components include collapsed content in their output rather than omitting it. This is an excellent method for establishing topical authority and delivering a superior user experience.
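Static generation performs the rendering once, at build time, instead of on every request. As a rough sketch, a build script renders each page and returns a map from output path to finished HTML. The page list, renderStaticPage, buildStaticSite, and the output paths are all hypothetical. Tools like Astro or Next.js do this through their own build commands. A real script would then write each entry to disk, for example with fs.writeFileSync.

```javascript
// Hypothetical content source: each page has a slug, a title, and collapsible sections.
const PAGES = [
  {
    slug: "shipping",
    title: "Shipping",
    sections: [{ heading: "Delivery times", body: "Orders ship within 2 business days." }],
  },
  {
    slug: "returns",
    title: "Returns",
    sections: [{ heading: "Return window", body: "Returns are accepted for 30 days." }],
  },
];

// Renders one page to a complete HTML string. Collapsed sections are still included.
function renderStaticPage(page) {
  const sections = page.sections
    .map(
      (s) =>
        `<section><h2>${s.heading}</h2><div class="panel is-closed">${s.body}</div></section>`
    )
    .join("");
  return `<!doctype html><html><head><title>${page.title}</title></head><body><h1>${page.title}</h1>${sections}</body></html>`;
}

// Runs once at build time and maps each output file path to its finished HTML.
function buildStaticSite(pages) {
  const output = new Map();
  for (const page of pages) {
    output.set(`${page.slug}/index.html`, renderStaticPage(page));
  }
  return output;
}

const site = buildStaticSite(PAGES);
console.log([...site.keys()]); // [ 'shipping/index.html', 'returns/index.html' ]
```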

4. The Specialized Solution: Implement Dynamic Rendering

For highly complex web applications where full SSR isn’t feasible, dynamic rendering offers a targeted workaround. This technique inspects the User-Agent header of each request. When the visitor appears to be a real user, the server sends the normal, JavaScript-heavy version of the page. When it identifies a known crawler user agent (like Googlebot or ChatGPT-User), it serves a pre-rendered, static HTML version instead [5]. Services like Prerender.io can automate this process, providing a critical bridge for legacy applications. Google itself describes dynamic rendering as a workaround rather than a long-term solution, and recommends server-side rendering, static rendering, or hydration instead [5].
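The routing decision at the heart of dynamic rendering is a User-Agent check. Below is a minimal sketch. isKnownCrawler, chooseVersion, and the token list are illustrative assumptions, not an exhaustive or authoritative list of crawler user agents. Vendors change their agents over time, so check each one’s documentation. The example User-Agent strings are abbreviated. In production, a service such as Prerender.io or your own headless-browser cache would supply the pre-rendered HTML.

```javascript
// Illustrative (not exhaustive) user-agent tokens for search and AI crawlers.
const CRAWLER_TOKENS = [
  "googlebot",
  "bingbot",
  "gptbot",
  "chatgpt-user",
  "oai-searchbot",
  "claudebot",
  "perplexitybot",
];

// Case-insensitive substring match against the request's User-Agent header.
function isKnownCrawler(userAgent = "") {
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.includes(token));
}

// Decides which version of the page to serve for a given User-Agent.
function chooseVersion(userAgent) {
  return isKnownCrawler(userAgent) ? "prerendered-html" : "client-side-app";
}

console.log(chooseVersion("Mozilla/5.0 (compatible; GPTBot/1.1; +https://openai.com/gptbot)")); // prerendered-html
console.log(chooseVersion("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/124.0")); // client-side-app
```

User-Agent headers are easy to spoof, so this check only routes traffic; it is not a security boundary. The pre-rendered version should contain the same content users see.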

The Bottom Line: Transform Your Visibility

The way you build your website has profound and lasting implications for whether, and how, your content is discovered. As AI-powered search evolves into a dominant force online, relying solely on client-side JavaScript rendering is no longer a viable strategy. It is an unnecessary and growing risk.

The safest, most powerful approach is to guarantee your content exists in the initial HTML your server delivers. Whether you achieve this by using CSS to hide content that is already in the DOM, adopting server-side rendering, or generating static pages, the goal is the same: give every crawler—from the simplest to the most advanced—unfettered access to the valuable content you want them to find.

Your accordion might look the same to a user either way. But to the growing ecosystem of AI tools that are actively shaping the future of search, the difference is everything.

References

[1] Google Search Central Community. (2022, October 26). Is content with display none read by search engines? [Online discussion]. Retrieved from https://support.google.com/webmasters/thread/185809376/is-content-with-display-none-read-by-search-engines

[2] Pollitt, H. (2026, January 8). Ask An SEO: Can AI Systems & LLMs Render JavaScript To Read ‘Hidden’ Content? Search Engine Journal. Retrieved from https://www.searchenginejournal.com/ask-an-seo-can-ai-systems-llms-render-javascript-to-read-hidden-content/563731/

[3] Vercel. (2024, December 17). The rise of the AI crawler. Vercel Blog. Retrieved from https://vercel.com/blog/the-rise-of-the-ai-crawler

[4] SolutionsHub. (2025, September 24). What is Server-Side Rendering: Pros and Cons. EPAM. Retrieved from https://solutionshub.epam.com/blog/post/what-is-server-side-rendering

[5] Google. (n.d.). Dynamic Rendering as a workaround. Google Search Central. Retrieved from https://developers.google.com/search/docs/crawling-indexing/javascript/dynamic-rendering
